Kubernetes is a widely used orchestration platform for managing containerized applications at scale. However, applications deployed in a cluster are not reachable from outside by default; external access has to be configured explicitly. Kubernetes offers several ways to do this, such as NodePort services, LoadBalancer services, and Ingress (ClusterIP, the default service type, is reachable only from inside the cluster). Each of these methods suits different use cases. In this article, we will explore Ingress and how to use it to provide external access to applications running within a Kubernetes cluster.

What is Kubernetes Ingress?

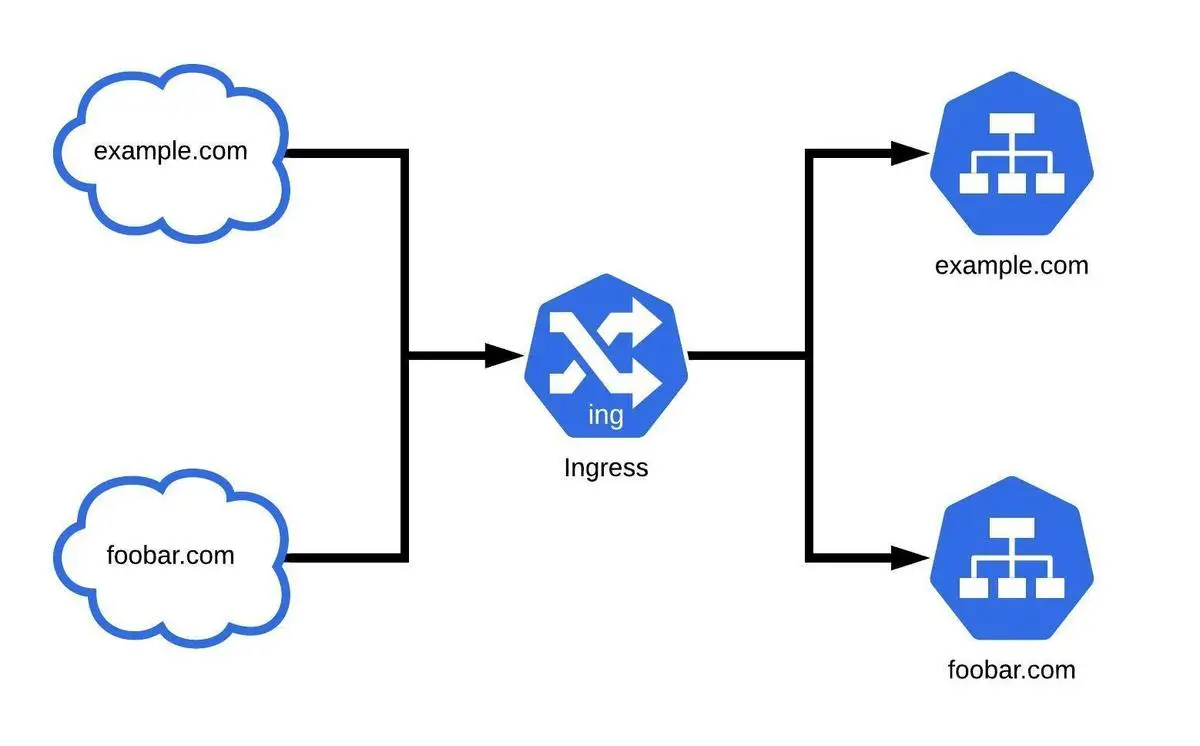

Kubernetes Ingress is an API object that manages external access to services in a Kubernetes cluster. Ingress exposes only HTTP and HTTPS traffic from external sources and routes it to services within the cluster. An Ingress rule does not reference Pods directly; it points to a service, and the service in turn selects the Pods. Thus, if you are facing an issue like a "503 Service Unavailable" error, start debugging at the service the Ingress points to, for example by checking that it exists and actually has healthy Pods behind it.

Ingress allows users to give services externally reachable URLs, terminate SSL/TLS, and set up name-based virtual hosting and load balancing. All of this is carried out by a component called the ingress controller, and the available functionality and customizability depend on which controller is used. A wide range of ingress controllers is available, some maintained by the Kubernetes project and many by third parties. Because Ingress handles only HTTP and HTTPS traffic, users need other methods, such as a NodePort or LoadBalancer service, to expose other ports or protocols.
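As a rough illustration of TLS termination, the sketch below shows an Ingress that serves one host over HTTPS. The host name, the Secret name, and the service name are all hypothetical, and the example assumes a Secret of type `kubernetes.io/tls` holding the certificate and key already exists in the same namespace. The rule fields used here are explained later in the article.

```yaml
# Minimal sketch, not a production configuration.
# All names (tls-example, shop.example.com, shop-example-tls, shop-service) are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example
spec:
  tls:
    - hosts:
        - shop.example.com          # host covered by the certificate
      secretName: shop-example-tls  # assumed to be an existing kubernetes.io/tls Secret
  rules:
    - host: shop.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: shop-service  # traffic reaches Pods through this service
                port:
                  number: 80
```

How TLS is actually handled (for example, redirects from HTTP to HTTPS) depends on the ingress controller in use.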

What is an Ingress Controller in Kubernetes?

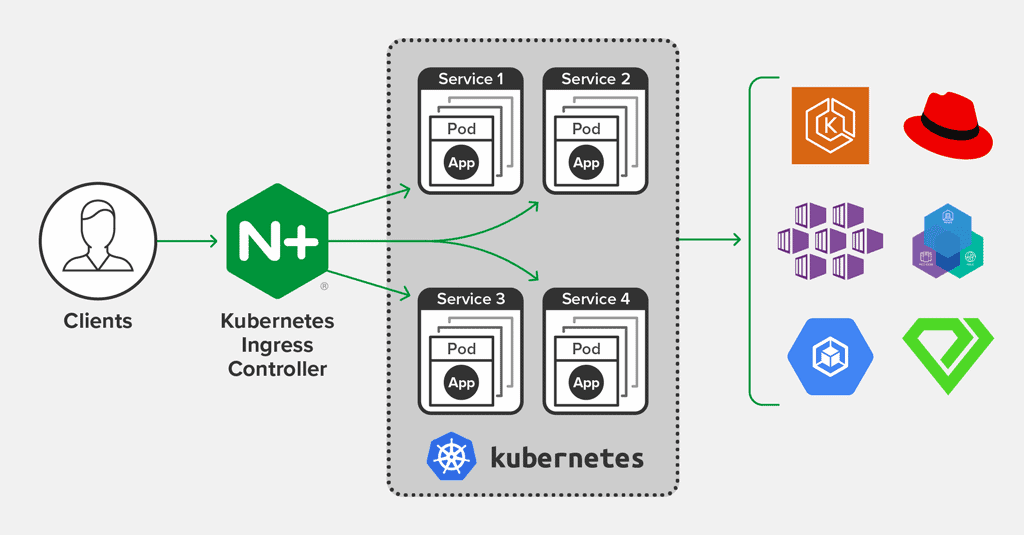

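The ingress controller is what actually implements the functionality of Ingress; an Ingress object has no effect unless a controller is running in the cluster. Ingress controllers are not started automatically with a cluster, unlike the other Kubernetes controllers that run as part of kube-controller-manager, so they must be installed and configured separately.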

The Kubernetes project itself supports and maintains the AWS, GCE, and Nginx ingress controllers, while others, such as the AKS Application Gateway, HAProxy, and Traefik ingress controllers, are available from third parties. Kubernetes also allows multiple ingress controllers to be deployed within the same cluster. The only requirement is to set the ingressClassName field correctly on each Ingress object so that the intended controller picks it up. If the field is omitted, Kubernetes applies the IngressClass that is marked as the default in the cluster, provided one has been marked.
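To make the relationship between an IngressClass and an Ingress more concrete, here is a minimal sketch. The object names and the service are hypothetical; the `controller` value shown is the one used by the community Nginx ingress controller and would differ for other controllers.

```yaml
# Minimal sketch; nginx-example, example-ingress and example-service are hypothetical names.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-example
  annotations:
    # Marks this class as the cluster default for Ingress objects
    # that do not set ingressClassName.
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx   # identifies which controller handles this class
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx-example    # explicitly selects the class above
  defaultBackend:
    service:
      name: example-service
      port:
        number: 80
```

With several controllers in one cluster, each would typically have its own IngressClass, and at most one class should carry the default annotation.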

Implementing Ingress to Facilitate External Connectivity

Users first need to install the relevant ingress controller in the cluster. The exact installation and configuration depend on the ingress controller and the Kubernetes cluster environment. For example, deploying an Nginx ingress controller on a bare-metal Kubernetes cluster can be vastly different from deploying it in a cloud environment like AWS. It may also require configuring additional resources, such as load balancers, in front of the ingress controller.

The next step is to configure the ingress itself. As with any other Kubernetes configuration, it also comes in the form of a YAML file. Ingress depends on user-defined rules to route traffic to the relevant services. All incoming traffic is matched against the rules defined in the ingress and routed to the matching service.

An ingress rule consists of the following:

- Host – Determines which host names the rule applies to. This field is optional. When it is set, only requests whose host (for example, app.example.com) matches are evaluated against the paths configured for that host. When no host is set, the rule applies to all incoming HTTP traffic.

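- Paths – The request paths to match, each with a path type (Exact, Prefix, or ImplementationSpecific) that controls how the match is made. Both the host and the path must match for traffic to be routed to the relevant service.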

- Backend – The service and port to which the request should be routed. Instead of a service, users can specify a resource backend, such as a storage bucket that serves static content.
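The three parts of a rule described above might be put together as in the following sketch. The host, service, and resource names are hypothetical, and the `StorageBucket` kind stands in for whatever custom resource a cluster might provide; it is not a built-in Kubernetes type.

```yaml
# Minimal sketch of one rule; all names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rule-example
spec:
  rules:
    - host: app.example.com            # Host: optional; omit to match all hosts
      http:
        paths:
          - path: /api                 # Path: matched together with the host
            pathType: Prefix           # required: Exact, Prefix or ImplementationSpecific
            backend:                   # Backend: a service and port
              service:
                name: api-service
                port:
                  number: 8080
          - path: /static
            pathType: Prefix
            backend:                   # Backend: a resource instead of a service
              resource:
                apiGroup: k8s.example.com   # hypothetical API group
                kind: StorageBucket         # hypothetical custom resource kind
                name: static-assets
```

A request for app.example.com/api/users would match the first path, while a request for a different host would match neither.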

Before writing ingress rules, users must decide what type of ingress to implement, which depends entirely on their requirements. To expose a single service, users can define a default backend without any rules, and all traffic will be routed to that backend. To expose multiple services behind a single IP address, a simple fanout can be used, with rules that route traffic to different services based on the request path. Name-based virtual hosting goes a step further, with rules created under multiple hosts to route traffic to different services. It supports more complex configurations, especially when multiple apps are deployed in a cluster.
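The three ingress types just described could look roughly like the sketches below. Every host and service name is hypothetical, and in practice only one of these would usually be applied for a given set of services.

```yaml
# Sketch 1: single service exposed through a default backend, no rules.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: single-service-example   # hypothetical name
spec:
  defaultBackend:
    service:
      name: web-service          # hypothetical service
      port:
        number: 80
---
# Sketch 2: simple fanout, one host and IP, routing by path.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-example
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /shop
            pathType: Prefix
            backend:
              service:
                name: shop-service
                port:
                  number: 8080
          - path: /blog
            pathType: Prefix
            backend:
              service:
                name: blog-service
                port:
                  number: 8080
---
# Sketch 3: name-based virtual hosting, routing by host name.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: virtual-host-example
spec:
  rules:
    - host: shop.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: shop-service
                port:
                  number: 8080
    - host: blog.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: blog-service
                port:
                  number: 8080
```

The fanout and virtual-hosting approaches can also be combined by listing several paths under each host.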

Ingress can be further augmented with multiple ingress controllers as well as external resources. These external resources include load balancers and firewalls that sit outside the cluster to further control and manage inbound traffic. In most cases, however, a single ingress controller is enough to meet all external connectivity requirements, provided users carefully assess which services need external access.

Conclusion

Kubernetes Ingress gives users the means to let external HTTP and HTTPS traffic reach internal cluster services through a single entry point. It not only simplifies the management of external access but can also improve the security of the cluster by removing the need to configure a separate entry point for each service.